
Overview

| Time (GMT+2) | Thu Jun 25 | Fri Jun 26 | Sat Jun 27 | Sun Jun 28 |

| 09:00 – 09:30 | 09:00 | 09:00 Computer Vision is a 3D Problem | ||

| 09:30 – 10:00 | 09:35 | 09:30 The Humanoid Open Competition Track | ||

| 09:45 | ||||

| 10:00 – 10:30 | ||||

| 10:30 – 11:00 | ||||

| 11:00 – 13:00 | Break | |||

| 13:00 – 13:30 | 13:00 Welcome | 13:00 | ||

| 13:30 – 14:00 | ||||

| 14:00 – 14:30 | ||||

| 14:30 – 15:00 | ||||

| 15:00 – 15:30 | 15:00 Welcome by Peter Stone | | | 15:00 Farewell & Closing |

| | 15:15 Documentary: “RoboCup – robots can’t jump” | | | |

| 15:30 – 16:00 | ||||

Color-code:

| Presentation | |

| Workshop | |

| Lightning Talk | |

| Welcome & Closing |

Schedule in Google Calendar can be found here.

Thursday, June 25

Afternoon Session

| 13:00 – 13:10 | Welcome and Introduction by the Organizing Committee |

| 13:10 – 13:15 | Welcome by Daniel Polani, Trustee and Past President of the RoboCup Federation |

| 13:15 – 13:20 | Welcome by Minoru Asada, Founding Trustee of the RoboCup Federation |

| 13:20 – 13:30 | Overview of the program, technical remarks |

Slides:

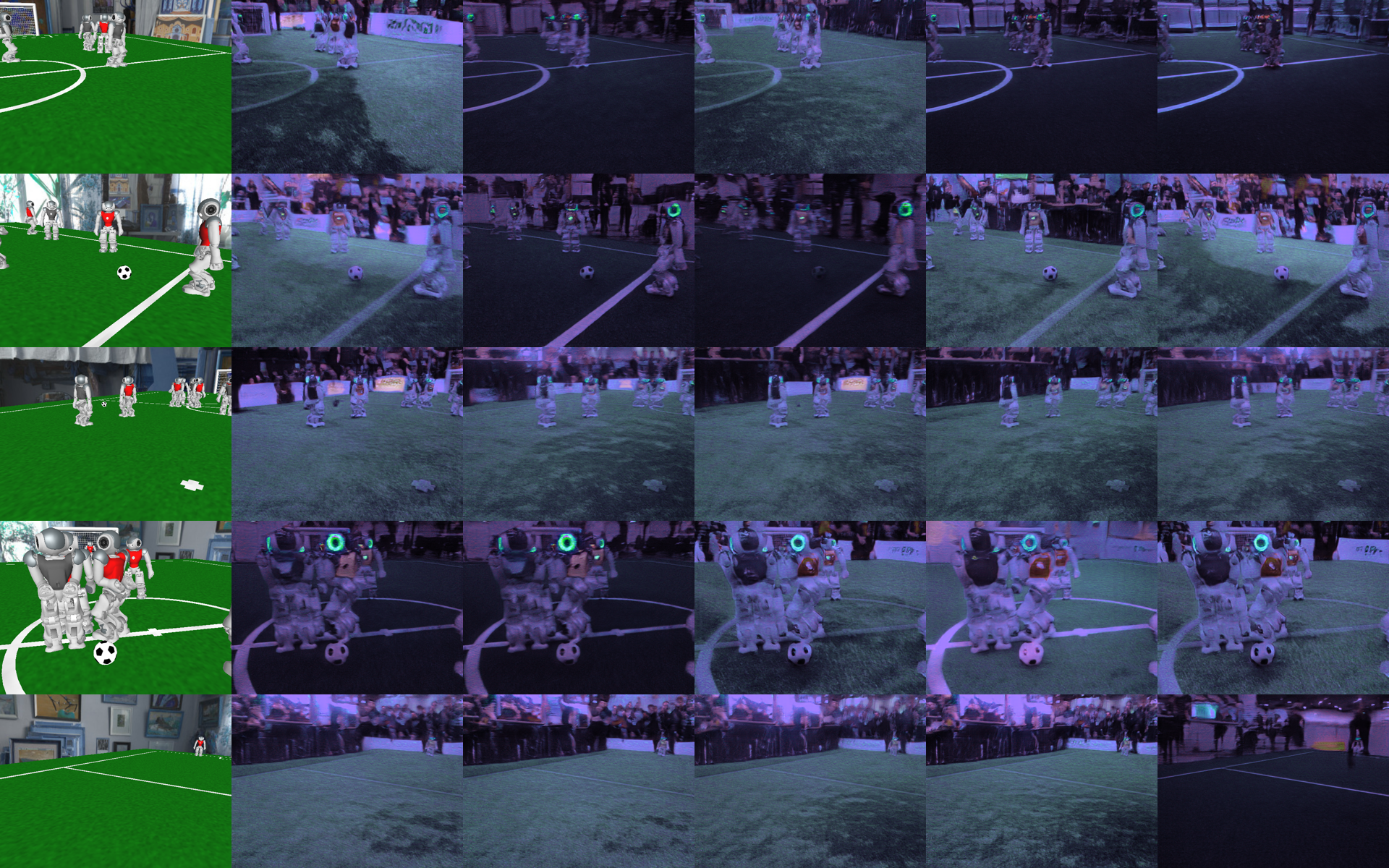

Abstract: Learning robot behaviors in simulation is faster, cheaper, and safer than learning in the real world. However, small inaccuracies in the simulator can lead to drastically different performance when policies learned in simulation are transferred to the real world. To close this reality gap, we present Grounded Action Transformation (GAT), an approach that improves the accuracy of the simulator without modifying the simulator directly. We show successful applications of GAT and related approaches. GAT successfully transfers a policy from the SimSpark simulator (used in the 3D Simulation League) to the Nao robot (used in the Standard Platform League), even outperforming policies hand-tuned for the Nao.
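The core of GAT is an action transformation g(s, a) = f_sim⁻¹(s, f_real(s, a)). It uses a forward model of the real robot to predict the next state, then an inverse model of the simulator to find the simulator action that produces that same state. The toy sketch below illustrates only this idea. It assumes a one-dimensional linear system. The functions `sim_step` and `real_step` and the gains 1.0 and 0.8 are hypothetical. Both models are fitted by least squares, whereas GAT learns them from data on the actual robot and simulator.

```python
# Toy 1-D sketch of GAT's action transformation g(s, a) = f_sim^-1(s, f_real(s, a)).
# Assumptions: scalar state and action, linear dynamics, least-squares "models".

def sim_step(s, a, k_sim=1.0):
    """Hypothetical simulator: the commanded action has its full effect."""
    return s + k_sim * a

def real_step(s, a, k_real=0.8):
    """Hypothetical real robot: actions have only 80% of the simulated effect."""
    return s + k_real * a

def fit_gain(samples):
    """Least-squares fit of k in s_next - s = k * a from (s, a, s_next) tuples."""
    num = sum(a * (s_next - s) for s, a, s_next in samples)
    den = sum(a * a for _, a, _ in samples)
    return num / den

def make_action_transformer(real_samples, sim_samples):
    """Build g(s, a) from a real-world forward model and an inverted sim model."""
    k_real = fit_gain(real_samples)  # forward model of the real world
    k_sim = fit_gain(sim_samples)    # simulator model, inverted below

    def ground(s, a):
        predicted_next = s + k_real * a      # f_real(s, a)
        return (predicted_next - s) / k_sim  # f_sim^-1(s, predicted_next)

    return ground

# Usage: fit both models from sampled transitions, then ground a policy's action.
pairs = [(s, a) for s in (0.0, 1.0, -2.0) for a in (0.5, -1.0, 2.0)]
ground = make_action_transformer(
    [(s, a, real_step(s, a)) for s, a in pairs],
    [(s, a, sim_step(s, a)) for s, a in pairs],
)
# Executing the grounded action in the simulator mimics the real transition.
print(sim_step(3.0, ground(3.0, -1.5)), real_step(3.0, -1.5))
```

The simulator code itself is never changed. Only the actions passed to it are transformed, which corresponds to improving the simulator "without the need to modify the simulator directly".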

Peter Stone, the current president of the RoboCup Federation, will welcome participants to the Virtual RoboCup Humanoid Open Workshops.

Peter Stone

Abstract: In 2018, filmmaker Silvia Schmidt followed the BoldHearts to RoboCup in Montreal. Knowing nothing about the world of robot football beforehand, she was amazed to discover a whole new universe. Most importantly, though: are the BoldHearts going to win?

Silvia will give a short introduction to her documentary before we live-stream the film.

Friday, June 26

Morning Session

Abstract: “Gretchen” is an experimental open humanoid robot intended to be a versatile platform for education and research. The main focus of the project is to keep the robot as open and as low-cost as possible, making it accessible to students and researchers at many levels. At the same time, the robot is meant to be capable enough to provide a rich platform for research. One of our development goals is for Gretchen to be able to participate in RoboCup Humanoid League competitions. These aims are achieved through a modular design, widely accessible components and manufacturing techniques, and extensive documentation and teaching materials. All hardware, software, and documentation are developed as open source.

The robot Gretchen is currently in a prototype stage and resembles the lower half of the human body, with 10 degrees of freedom. The complete robot is planned to be about 110 cm tall. Only widely accessible materials, electronic components, and manufacturing methods are used. Joints and drive parts are 3D-printed; limbs and the torso are laser-cut from plywood. The joints are actuated indirectly through toothed belts, which relieve stress on the motors. As one of the highlights, the robot is powered by low-cost servo motors controlled by custom-developed control boards, the so-called Sensorimotor boards. The boards are completely open source and can drive a wide range of brushed DC motors. They provide smart-servo features such as direct PWM control, various sensory feedback (position, current, voltage, temperature), RS485 bus communication, and customizable firmware. A first version of the extensive documentation can be found here.

Several prototypes of the robot “Gretchen” have already been produced and are under further development. The robot is also already being deployed in teaching, where students work and experiment with its design, actuators, 3D-printed components, firmware, power supply, communication bus, software libraries or the API.

In this talk, we will present the current state of the Gretchen project and describe some of the robot's most interesting features in more detail. Part of the talk will be dedicated specifically to the Sensorimotor boards.

Afternoon Session

Abstract: A new roadmap has been presented to the league here.

During this workshop, we will present and discuss the upcoming changes for the league.

This discussion will cover both short-term rule changes and the long-term development of the league.

Some of the changes foreseen in the RoadMap draft have already been implemented in the 2020 rulebook. One of the most important was the reduction of size classes to two (KidSize and AdultSize), with no overlap in height. However, the new maximum height for KidSize robots and the new minimum height for AdultSize robots are still under discussion. In this workshop, we would like to provide an open forum for gathering arguments from the league about the new size division.

Teams have asked for less frequent rule updates, and this has been reflected in the RoadMap.

Given that RoboCup 2020 has been postponed, we will also discuss whether to postpone any major rule changes until RoboCup 2024 or to agree on significant changes for RoboCup 2023.

Saturday, June 27

Morning Session

Abstract: Motion generation and planning is tricky. There is a plethora of approaches that enable smart or intuitive motion generation for robots, but a one-size-fits-all solution has yet to be found. This talk will not propose such a solution either. Instead, it will show how the unusual(ish) interface semantics of smart actuators influence the motion generation semantics in my control software.

The off-the-shelf smart actuator usually wants to be controlled like so: “Tell me how far I shall move and how fast I should get there”. This always felt a bit odd, because it makes certain movements very cumbersome to generate. Think about a trajectory starting at 0, going to 1 and then returning to 0. You’d tell the actuator to go to the final target value of 0 (which, as it happens, is where it already is) but with a nonzero velocity. Under certain conditions, this apparent contradiction leads some “smart” actuators not to move at all.
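The contradiction is easiest to see with a minimal model of a "go to this position at this speed" actuator. The model and the names `classic_actuator_step`, `run`, `speed` and `dt` are illustrative assumptions. They do not describe the interface of any particular product. The model only ever moves toward its goal. A single command for the 0 → 1 → 0 trajectory (goal 0, nonzero speed) therefore leaves it standing still.

```python
def classic_actuator_step(position, goal, speed, dt):
    """Hypothetical 'go to goal at speed' actuator: advance toward goal by at most speed * dt."""
    error = goal - position
    max_step = speed * dt
    if abs(error) <= max_step:
        return goal  # close enough: snap to the goal
    return position + (max_step if error > 0 else -max_step)

def run(position, goal, speed, dt=0.1, steps=10):
    """Apply the same command for several control cycles and record the positions."""
    history = []
    for _ in range(steps):
        position = classic_actuator_step(position, goal, speed, dt)
        history.append(position)
    return history

# One command for 0 -> 1 -> 0: goal 0 with a nonzero speed. Every position stays at 0.0.
print(run(0.0, goal=0.0, speed=4.0))

# The same motion needs two commands (goal 1, then goal 0) plus knowledge of when to switch.
up = run(0.0, goal=1.0, speed=4.0)
down = run(up[-1], goal=0.0, speed=4.0)
```

The motion planner, not the actuator, then has to split the trajectory into waypoints and decide when to send each one.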

A nicer interface for smart actuators would be: “Give me a function of time that I shall follow”. This function could be expressed as a polynomial, and the above contradiction would be resolved. However, the semantics of this interface have major ramifications for motion planning and generation. The “classic” smart actuators require information about a future pose, whereas my “unusual(ish)” actuators require information about the desired pose at the current point in time. As it turns out, these different semantics allow for a great deal of simplification and generalization in least-squares inverse kinematics solvers. Furthermore, the polynomials required by the actuators can be generated surprisingly easily by performing a Taylor expansion of the task functions at the current configuration.
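The sketch below shows the "function of time" interface. It assumes the actuator accepts plain polynomial coefficients [c0, c1, c2, ...] in powers of the time elapsed since the command was issued. This encoding is an assumption, since the talk does not specify one. Over one second, the 0 → 1 → 0 trajectory becomes p(t) = 4t − 4t². Its end point equals its start point while its initial velocity p'(0) = 4 is nonzero, so a single command describes the whole move. The `taylor_coeffs` helper shows only the generic step of turning derivatives at the current point into polynomial coefficients f⁽ᵏ⁾(t₀)/k!. How the speaker applies this step to task functions inside the IK solver is not reproduced here.

```python
from math import cos, factorial, sin

def eval_poly(coeffs, t):
    """Evaluate c0 + c1*t + c2*t**2 + ... using Horner's scheme."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * t + c
    return result

def derivative(coeffs):
    """Coefficients of the polynomial's time derivative."""
    return [k * c for k, c in enumerate(coeffs)][1:]

def taylor_coeffs(derivs):
    """Turn derivatives f(t0), f'(t0), f''(t0), ... into coefficients f^(k)(t0) / k!."""
    return [d / factorial(k) for k, d in enumerate(derivs)]

# The 0 -> 1 -> 0 move over one second as a single command: p(t) = 4t - 4t^2.
trajectory = [0.0, 4.0, -4.0]
print(eval_poly(trajectory, 0.0), eval_poly(trajectory, 0.5), eval_poly(trajectory, 1.0))
print(eval_poly(derivative(trajectory), 0.0))  # initial velocity is nonzero

# Generic Taylor step: third-order expansion of sin around t0 = 0.
t0 = 0.0
local = taylor_coeffs([sin(t0), cos(t0), -sin(t0), -cos(t0)])
print(eval_poly(local, 0.1), sin(t0 + 0.1))  # polynomial in (t - t0) vs. exact value
```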

My talk will cover the idea behind it all, the math involved and what it looks like on a robot.

Abstract: The RoboCup Humanoid Research Demonstration is an initiative for showcasing the latest research relevant for humanoid robotics. It is meant to give room for presenting and discussing research initiatives that are not (yet) ready to be applied in a RoboCup competition. Any research is welcome to be presented, as long as it is related to humanoid robotics and has the potential of a practical demonstration. This includes both hardware and software advancements, as well as education with and for humanoid robots.

During this short presentation, the Technical Committee will give an overview of the ideas behind the Humanoid Research Demonstration and the current plan for establishing this league for RoboCup 2021 in Bordeaux. There will then be time for questions, discussions and feedback on how we can successfully organize the first version of the Humanoid Research Demonstration next year.

Afternoon Session

To get the most out of the hands-on exercises, it is crucial to set up the Docker-based environment at least one day in advance.

- Presentation: Introduction to ROS 2 (20 min)

- Set up the humanoid 3-D simulation environment (10 min; the Docker-based environment is prepared and installed by participants beforehand)

- Hands-on demonstration: ROS 2 basics – topics / services / parameters / visualization (30 min)

- Exercise 1: create a sense-act package (60 min)

- Exercise 2: advanced ROS 2 features, e.g. node composition, quality-of-service, node lifecycle (60 min)

Requirements:

Knowledge/experience level required:

- Linux command line basics

- Exercise 1: Python 3

- Exercise 2: C++ / CMake (enough to follow provided steps)

- No knowledge of or experience with ROS 1 or 2 required

Hardware/software required:

- Mid-level PC capable of running 3-D physics simulation

- Linux

- Git

- Docker

- ~5-6 GB of free disk space

All requirements will be provided in advance as a Docker environment with ROS 2 and demo packages installed. Participants need to install Docker and download the development environment, which is estimated to take 10-60 min depending on download speed. The development environment must be set up at least a day before the workshop so that there is enough time to help with any issues.

Instructions and bootstrap/configuration files for downloading and test-running the environment will be provided at this Git repository. The GitLab.com issue tracker can be used to report and resolve any problems with setting up the requirements before the workshop.

Sunday, June 28

Morning Session

Recording: Available on YouTube

Afternoon Session

This workshop aims to highlight the future challenges that the robotics community must address before human players can play against robots at RoboCup in 2050. We will focus in particular on the human-robot interaction factors that can affect and enhance people’s acceptance, sense of safety, and comfort in close physical interactions with robots.

Authors should format their papers according to the IEEE two-column format. Use the following templates to create the paper and generate or export a PDF file: LaTeX or MS-Word. The organisers will explore the possibility of a joint publication of selected input papers, e.g. at the next RoboCup Symposium. Authors can email their papers to Alessandra Rossi <alexarossi_AT_gmail.com>.

Presenter(s):